The AI revolution that nobody seems comfortable with -- for now

"Tell me how this ends" is -- for now -- trumped by the question "Tell me how this works"

The ongoing disparity in headlines is striking: AI is either going to change everything for the better or is a complete waste of money and resources and will only foster economic tumult and social unrest.

NYT: Powerful A.I. Is Coming. We’re Not Ready.

FUTURISM: Majority of AI Researchers Say Tech Industry Is Pouring Billions Into a Dead End; "The vast investments in scaling... always seemed to me to be misplaced."

As “cones of plausibility” go, that’s a wide spread.

Let me parse some of the latest:

TECHNOLOGY MAGAZINE: SAP: Why Executives are Starting to Favour AI Over Humans

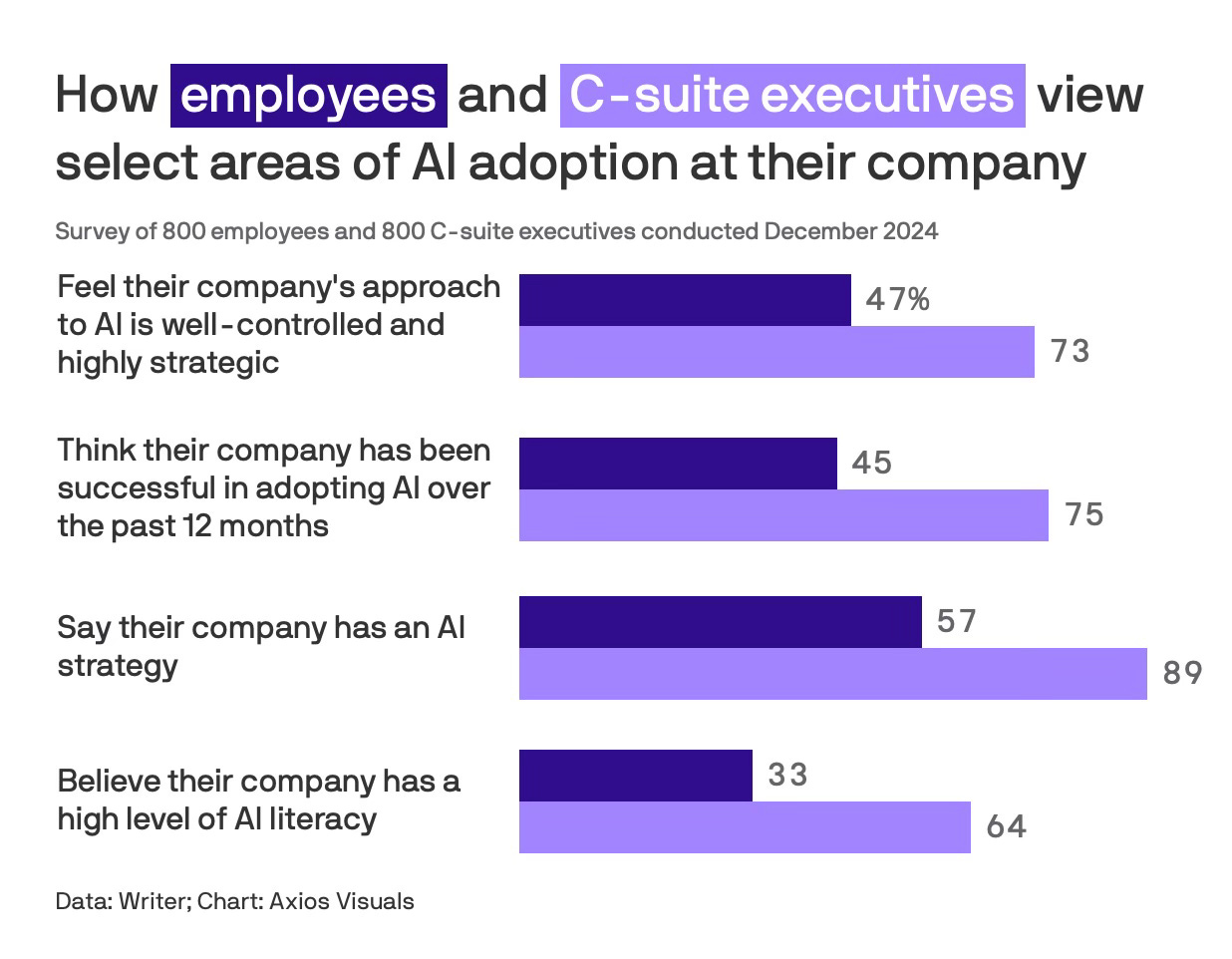

The key finding of this sizable survey of C-Suite execs:

SAP also found that 74% of executives place more confidence in AI for advice than in family and friends – and this shift towards algorithmic decision-making is particularly evident in larger corporations.

In companies with revenue of US$5bn or more, 55% of executives report that AI-driven insights have replaced or frequently bypass traditional decision-making processes.

Replacing and bypassing traditional decision-making processes: that’ll make people uncomfortable right off the bat.

While we are told that the C-Suite already considers AI part of the “trusted inner circle,” the rest of the company seems … downright hostile.

CIO DIVE: C-suite leaders grapple with conflict, silos amid AI adoption

AXIOS: AI is "tearing apart" companies, survey finds

Two-thirds of company execs say that, for now, AI is proving to be far more divisive than uniting. Execs are “massively disappointed” and Gen Z rank-and-file admit to the occasional vindictive sabotage.

Around 1 in 3 employees on average are pushing back, refusing to use AI tools or ditching AI-related training.

All natural, in a sense, and yet disruptive in bad ways.

Class consciousness seems to be brewing:

Which suggests this is fundamentally about job security, thus the pushback on trust:

Around half of employees say AI-generated information is inaccurate, confusing and biased.

Whom do you trust?

As I have noted time and again here: XAI (Explainable AI) is the long pole in the tent of AI’s practical evolution (i.e., adoption).

I am reminded of the reality that elevators, when they first appeared, so scared people that it was necessary for decades to keep operators in the car, even though, for the most part, they did not need to be there. That kind of hand-holding exercise is going to be common.

Then there’s the larger argument I make about the Centaur Solution: AI beats humans most of the time but AI + humans beats AI virtually all of the time.

UNITE AI: New Survey Finds Balancing AI’s Ease of Use with Trust is Top of Business Leaders Minds

My frequent intellectual AI collaborator Steve DeAngelis likes to say that the future of corporate leadership in an era of rapid AI adoption will center on being able to explain AI in a 360-degree fashion:

How does it operate? (going from a black box to a glass box, as Steve says)

Where does it come from? (inputs, pedigree)

Where is it best applied? (outputs, applicability)

And how should it interact with humans? (Centaur models)

… to name just four.

Done right, DeAngelis argues, we enjoy an AI jobs boom that stretches toward the distant horizon (see his Schwab Network interview here):

First, in the preparation of our economy and society and government to take advantage of all these capacities (think of the potential construction boom alone regarding water and power management); and

Second, in recasting how we interact with all these AI capabilities, a dynamic that allows humans to elevate their roles and responsibilities from what used to be all about maintaining the accuracy and completeness of the System of Record (Enterprise Resource Planning [ERP] systems like those offered by SAP) to the higher-order System of Intelligence® layer (an Enterra Solutions’ core offering), which serves as Explainer, Decoder, and Translator between that System of Record and the outside world, increasingly described as the Internet of Things (IoT).

How those two systems interact is what gives us the AI of Things (AIoT) — the Promised Land.

I have found this explanation very empowering because, until I came across it, I just could not figure out how ERPs would fit into this AI future.

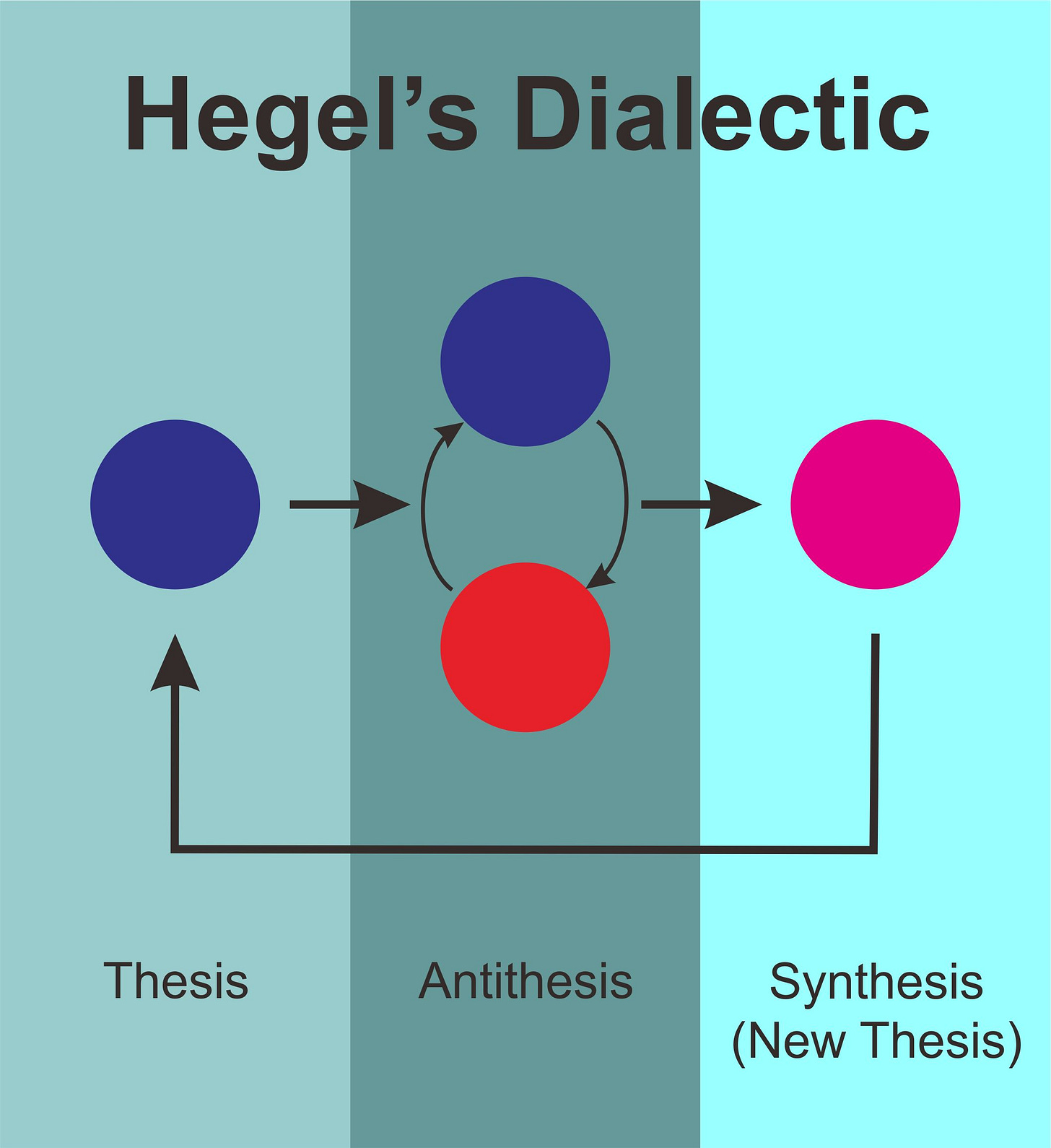

It also smacks of a Hegelian dialectic (my first true love):

Economic base (System of Record [SOR]) plodding along, having achieved most of what it can achieve within an enterprise (linear optimization leveraging classic computing capacity): our long-time “thesis”

While the IoT revolutionizes the social, economic, and political superstructures, creating the opposing condition of Big Data beyond understanding, much less control — our radical new “antithesis” that scares the hell out of us (“solvable” by Black Box AI engines that instinctively strike us as akin to magic)

Thus, synthesis arrives in the form of that System of Intelligence® layer that serves as Decoder, Translator, and Explainer between an enterprise’s internal world of decision-making and its pervasively sensored operating landscape (aka, the market, theater of operations, etc.).

So, while the SOR can be efficient in handling structured, predictable problems with well-defined constraints (linear optimization), IoT-generated Big Data often requires nonlinear optimization to manage complex, dynamic relationships inherent in real-time, high-dimensional datasets (chaos, as we have long dubbed it, in a very beyond-here-be-dragons kind of way).

Again, “visually” helpful to this visual thinker: you have your ERP inside your enterprise, you have the IoT world beyond, with all that Big Data, and the question is: how does your Internal (ERP) best interface with that External (IoT)?

This model essentially comprises different data realities:

Inside the wire, so to speak, you and your ERP deal with Known Knowns (System of Record).

Across the wire, you have to manage — as a baseline — the cyber threat (Known Unknowns).

Beyond the wire, you must access, process, and manage that universe of IoT-generated Big Data full of Unknown Unknowns to be discovered, responded to, and addressed. Until you get a grip on this universe and successfully interface it with your own, you’ll tend to “surrender” to the notion of a volatile, uncertain, complex, and ambiguous (VUCA) environment that, unless maturely engaged, is likely to keep you in a defensive crouch. Master it, and you’re into Industry 4.0.

Today, per Steve’s analysis, we tackle that synthesis/interface/decision-making space (between enterprise and market/world outside) using AI as it is presently generated by classic computing capacities. Where we collectively reach an inflection point is when computing successfully branches off into Quantum Computing (beware the hype-filled claims of today), which allows for computational breakthroughs when it comes to the hardest-of-hard decision-making problems, known as NP-Hard problems (NP standing for nondeterministic polynomial time).

Nondeterministic Polynomial Time, commonly abbreviated as NP, is a fundamental concept in computational theory and complexity science. It refers to a class of decision problems for which a "yes" solution can be verified by a deterministic Turing machine in polynomial time.

Google AI
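To make the "verified in polynomial time" idea a little more concrete, here is a minimal sketch in Python. It uses Subset Sum (does some subset of a list of numbers add up to a target?), a classic NP-complete problem chosen purely for illustration; it is not drawn from Steve's analysis, and the function names are hypothetical. Checking a proposed "yes" answer takes a single pass, while finding one by brute force can mean trying all 2^n subsets.

```python
from itertools import combinations
from typing import List, Optional, Sequence


def verify_subset_sum(numbers: Sequence[int], target: int, certificate: Sequence[int]) -> bool:
    """Check a proposed 'yes' answer (a list of distinct indices) in linear time."""
    if len(set(certificate)) != len(certificate):
        return False  # each index may be used only once
    if any(i < 0 or i >= len(numbers) for i in certificate):
        return False  # every index must point into the list
    return sum(numbers[i] for i in certificate) == target


def brute_force_subset_sum(numbers: Sequence[int], target: int) -> Optional[List[int]]:
    """Find a certificate by trying every subset: exponential time in the worst case."""
    for size in range(len(numbers) + 1):
        for combo in combinations(range(len(numbers)), size):
            if sum(numbers[i] for i in combo) == target:
                return list(combo)
    return None  # no subset hits the target


if __name__ == "__main__":
    nums = [3, 34, 4, 12, 5, 2]
    answer = brute_force_subset_sum(nums, 9)      # slow part: search
    print(answer, verify_subset_sum(nums, 9, answer))  # fast part: check
```

The asymmetry is the point: the verifier's cost grows with the length of the list, while the search's cost doubles with every element added.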

[At this point, Tom/Icarus loses consciousness and starts plummeting back to Earth, wings aflame.]

But that was exciting, huh?

DeAngelis’s efforts to school me on this stuff continue … but now, at least, I understand why Steve needed to found a second high-dimensional math-crunching company of “beautiful minds” in Princeton, NJ (known as Massive Dynamics) to fuel Enterra Solutions (in which, full disclosure, I retain a very-modest-but-meaningful-to-me equity position after a half-decade of executive service in the company’s first decade) as it invented and marketed its Autonomous Decision Science® Generative-AI platform (within which the System of Intelligence® resides).

Steve founded Massive Dynamics years ago because he foresaw this moment in history, along with the advanced math capabilities required for navigating this computational collision between enterprises (with their SORs/ERPs) and the emerging IoT.

Steve and I bonded two decades ago over our shared passion for understanding history in terms of Grand Narratives: Steve being more adept (by far) on the economics and technology, while I am stronger on the politics and the security.

Why we thus fit as intellectual partners: Recall my notion, first voiced in The Pentagon’s New Map (Steve and I first met in 2004, the year of its publication):

Technological advances tend to race ahead of security capacities

Economic experimentation tends to race ahead of political understanding — much less regulation.

These are scary dynamics, and yet they constitute the “secret sauce” of market democracies that are confident enough and competent enough to let their entrepreneurs (for the most part) run wild and free.

This is why and how we beat China in the AI race: technological revolutions cannot be planned — much less centrally.

In a very dialectical manner, when technological models outstrip security realities, a massive rule-set gap is revealed. The same happens when economics outstrips politics. Indeed, the two rule-set gaps tend to open up under very similar conditions (eras of great advancement).

So, over time, the thesis and antithesis invariably diverge to the point of crisis, when, typically, some shocking event reveals all (like a 9/11 or the 2008 financial crisis or COVID-19 or Luke, I am your father), triggering a rapid rule-set reset (synthesis) to restore order to the Force.

Reconnecting technology to security (re-synthesizing that bond), along with reconnecting economies to politics, are thus the primary intellectual and applied challenges of any era of rapid advancement/transformation, such as we endure today — particularly as we slouch toward Singularity.

And yes, this is typically where I lose consciousness as I seek to process and broad-frame what DeAngelis and his small army of Beautiful Minds have developed to-date, and what they’re moving toward over time.

Check out the podcast Steve recently posted. Skip the weaker first third and go for the mind-stretching rest, when he and his interlocutor begin their mind-meld on AI at 18:30.

I found the podcast extremely helpful on several levels as I seek to get smart on AI, Quantum Computing, et al.

And I’m spent.

I apologize if this post got too inside my head, but, since this is basically a workspace for me, sometimes ... it just has to be this way.

Bird gotta fly!